-

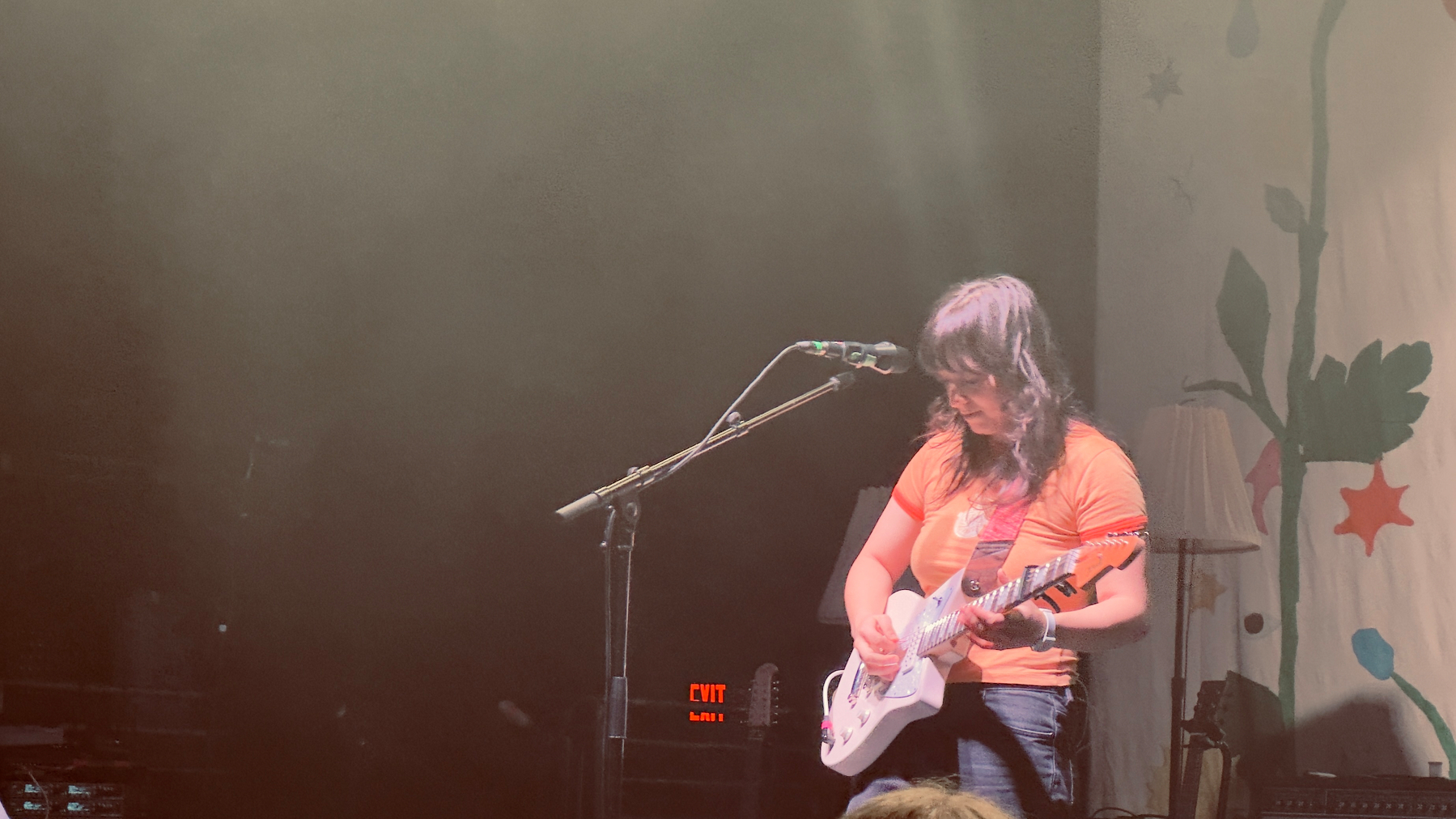

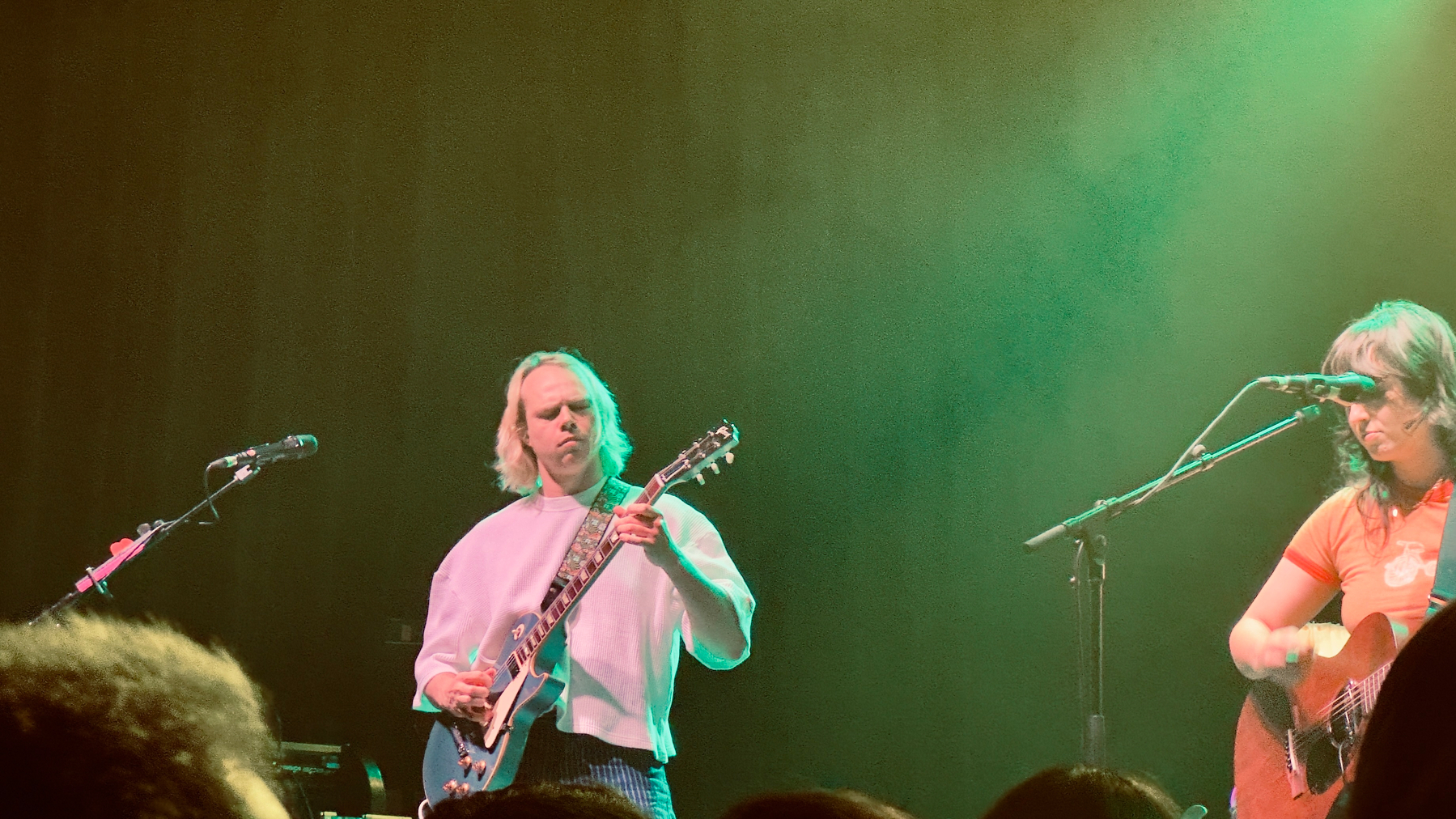

Seeing @thebeths.bsky.social and @phoeberings.bsky.social was a highlight of my weekend. Great show! #Philly #Music

-

What time is it? #LiquidGlass.

-

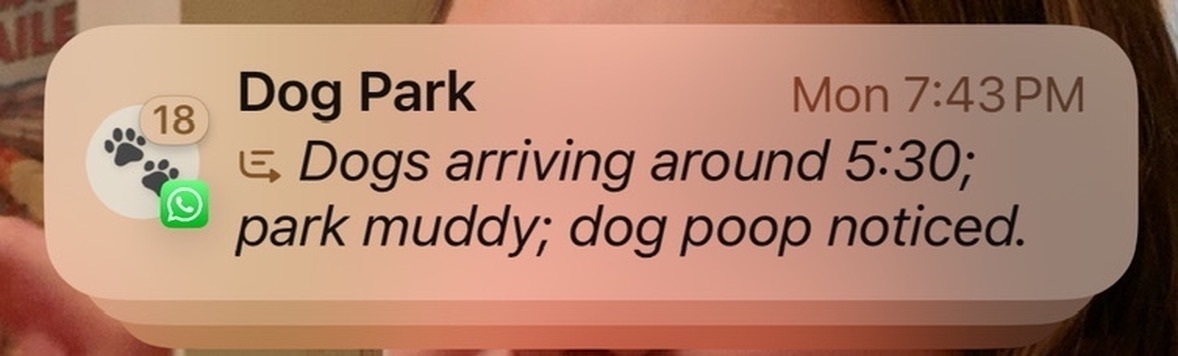

Another example of iOS 26 Liquid Glass usability issues.

-

Manamana

-

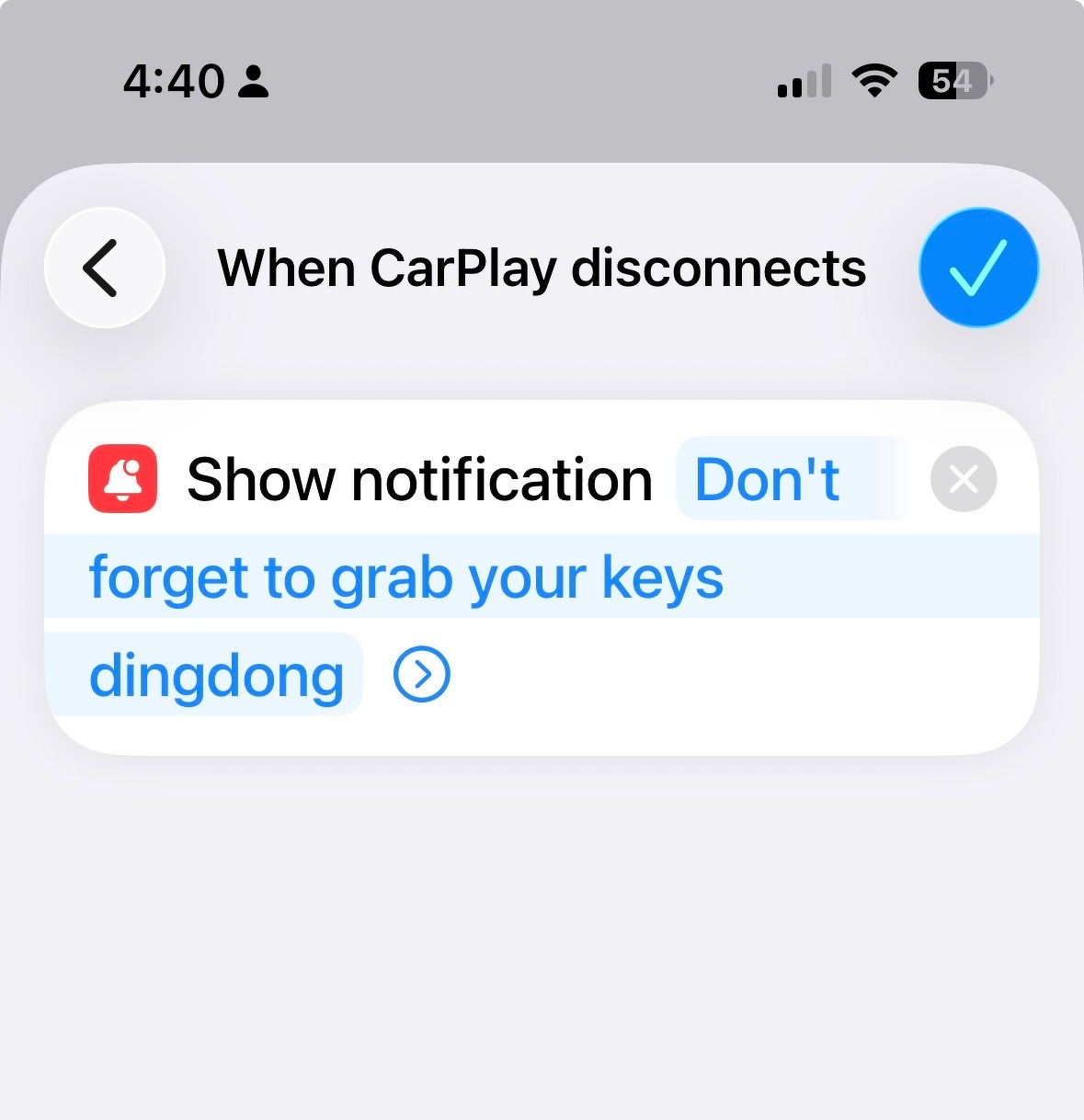

My new favorite iOS Shortcut? A reminder to grab my keys when I leave the car.

See the screenshots below.

-

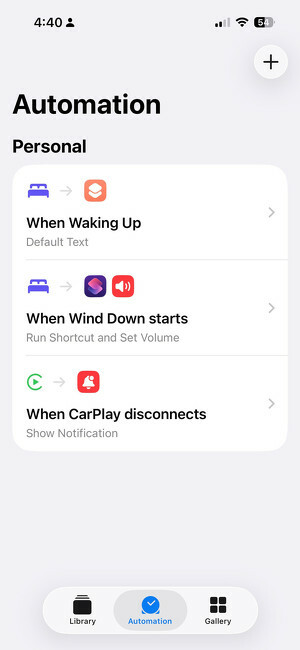

I really wish Google would do something about GCal spam. I get a fake calendar invite in my calendar at least once a week.

This time, the knucklehead’s website showed some Google reviews. So I decided to oblige.

EDIT: Turns out there is a setting on Google Calendar for this.

-

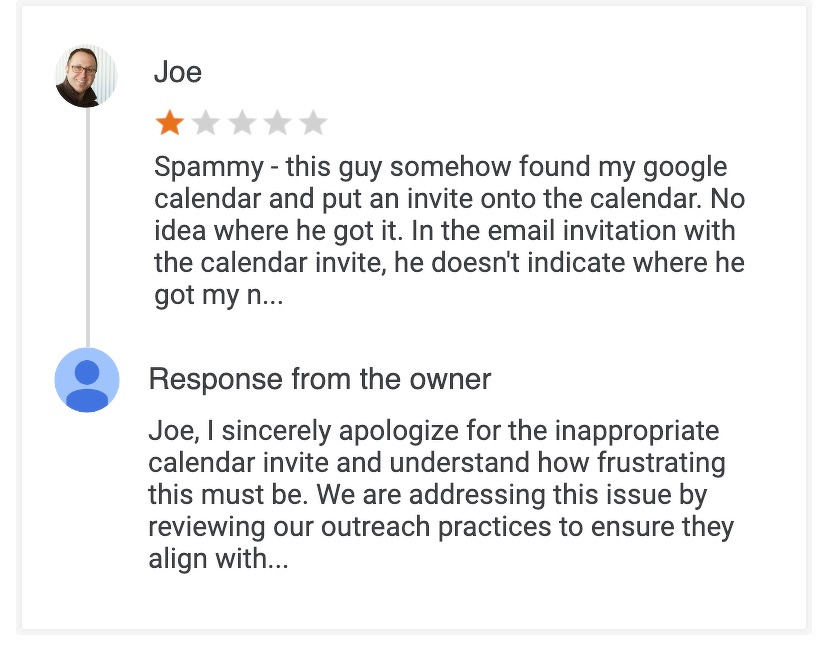

Is this a new SMS spam vector? I tend to get about 3-4 a week. It seems to have started in September.

-

Call me crazy, but demolishing a chunk of the White House for a $240M gilded ballroom doesn’t seem like the act of someone who thinks he’ll be out of office in 4 years.

-

If I ever become famous and do the talk show circuit, I’m using Y&T’s “Summertime Girls” the way Paul Rudd used Mac and Me.

-

My dog Barnaby has directed his 300 million olfactory receptors exclusively to detecting pizza.

Grabbed a cold slice from the fridge. He arrived from upstairs into my office right behind me just as I was sitting down.

This guy can smell pizza through time and space.

#dogs #pizza

-

Finally got a chance to go to Laurel Hill in Philadelphia.

-

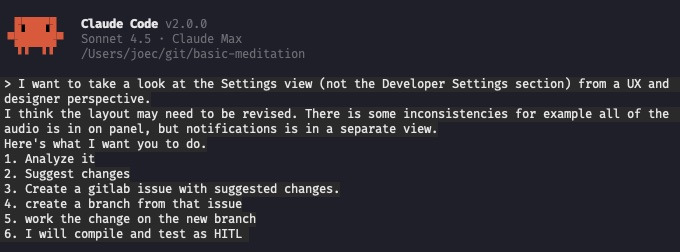

I wanted to update the Settings screen in my new app, Minimalist Meditation.

So, before I started making dinner, this is what I asked Claude to do.

This is the future.

#indiedeveloper #ios #meditation

-

Wow, amazing show Saturday night with Elbow @ Brooklyn Bowl in Philadelphia. We had a great spot on the side of the stage.

Here’s the set list: music.apple.com/us/playli…

-

Oh, the irony. Although, to be fair, working remotely using Teams does kind of suck.

-

The Apple Watch Sleep Score is like the James Suckling wine reviews. It looks like a 0-100 scale, but we really know it’s a 90-100 scale.

Last night: Went to bed at 11, finally fell asleep around 1.

Today: Apple Notification “Excellent Sleep Score - 93”

-

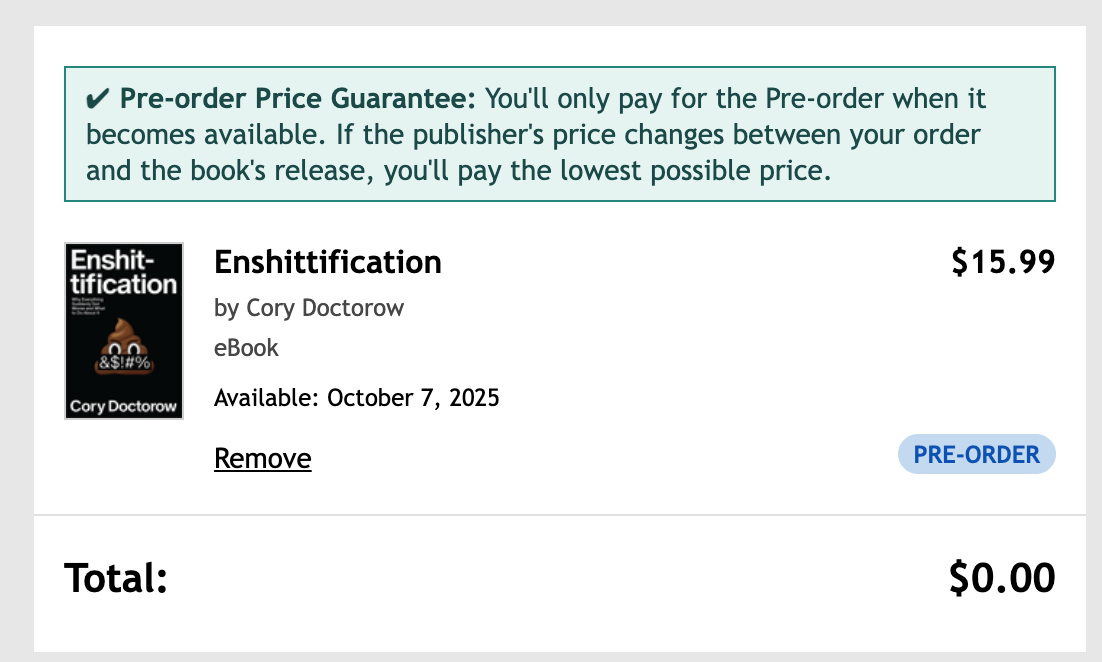

I just listened to a recent interview with Cory Doctorow on a TWiT podcast and immediately pre-ordered Enshittification from @pluralistic.

-

Damn, another cacio e pepe fail. I can’t seem to get the cheese consistency right. I think this time the pasta was too hot: ~174 degrees w/ an infrared thermometer.

#cooking #romanpastas #food

-

Called Visible because Apple Watch cellular data hasn’t been working. They fixed it by having me restart my phone and assured me that they didn’t do anything else on their side.

Disconnected from support, headed out the door, and realized that my iPhone now has no cellular service.

-

Waymo spotted on 76 in #Philadelphia.

-

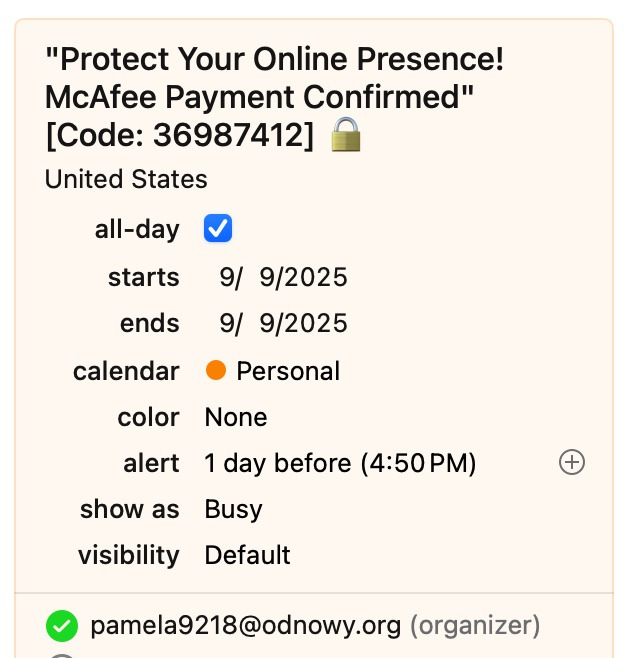

Is it irony that I just received a spammy calendar invite about protecting my online presence?

-

Copilot for Word is less than helpful. I asked it a question that should be contextually bound to Word by the Copilot system prompt. Instead, I’m given a generic answer about pasting into “some editors.”

Hey @Microsoft, happy to work on your system prompts. #smh #ai #copilot

-

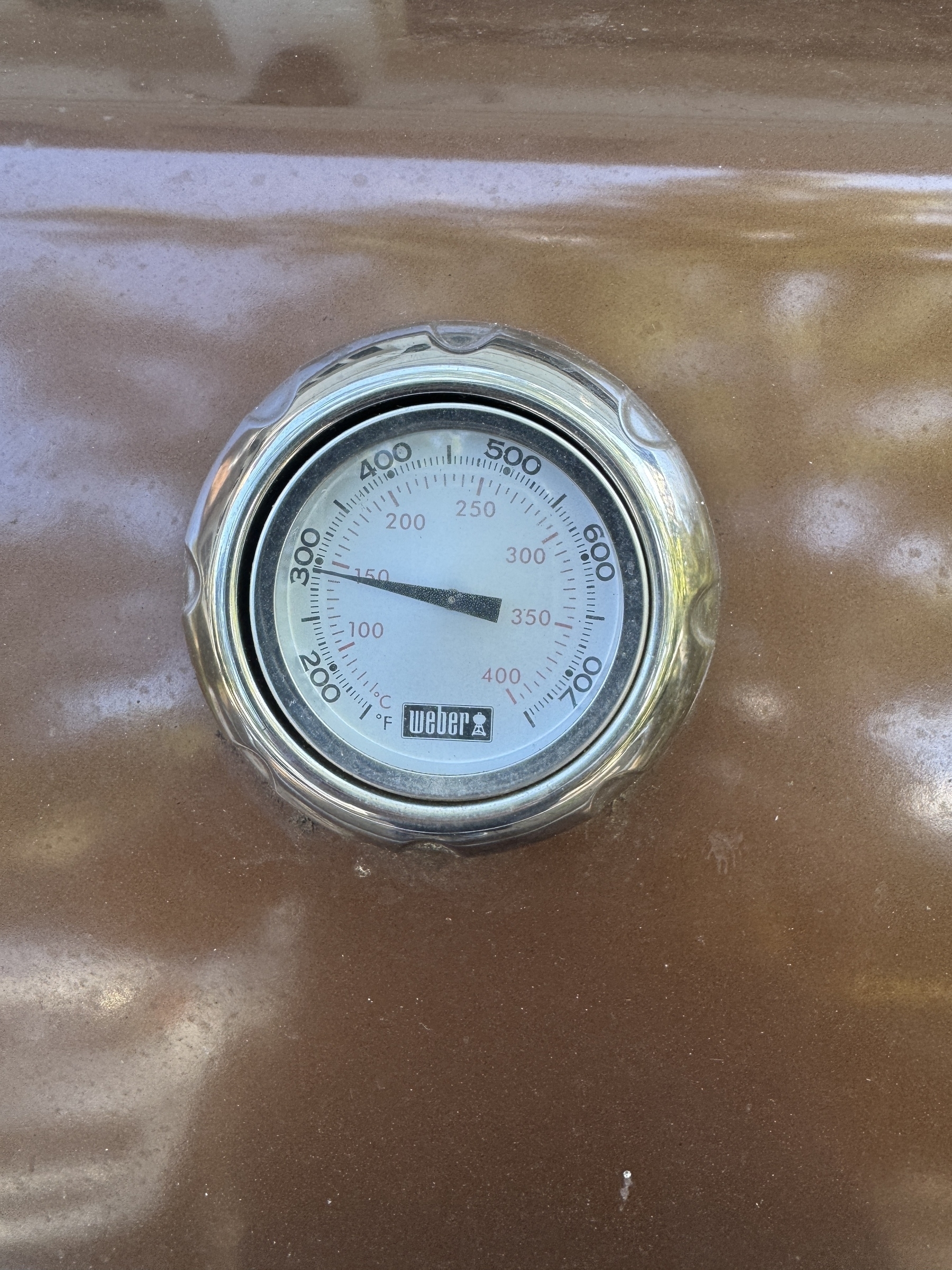

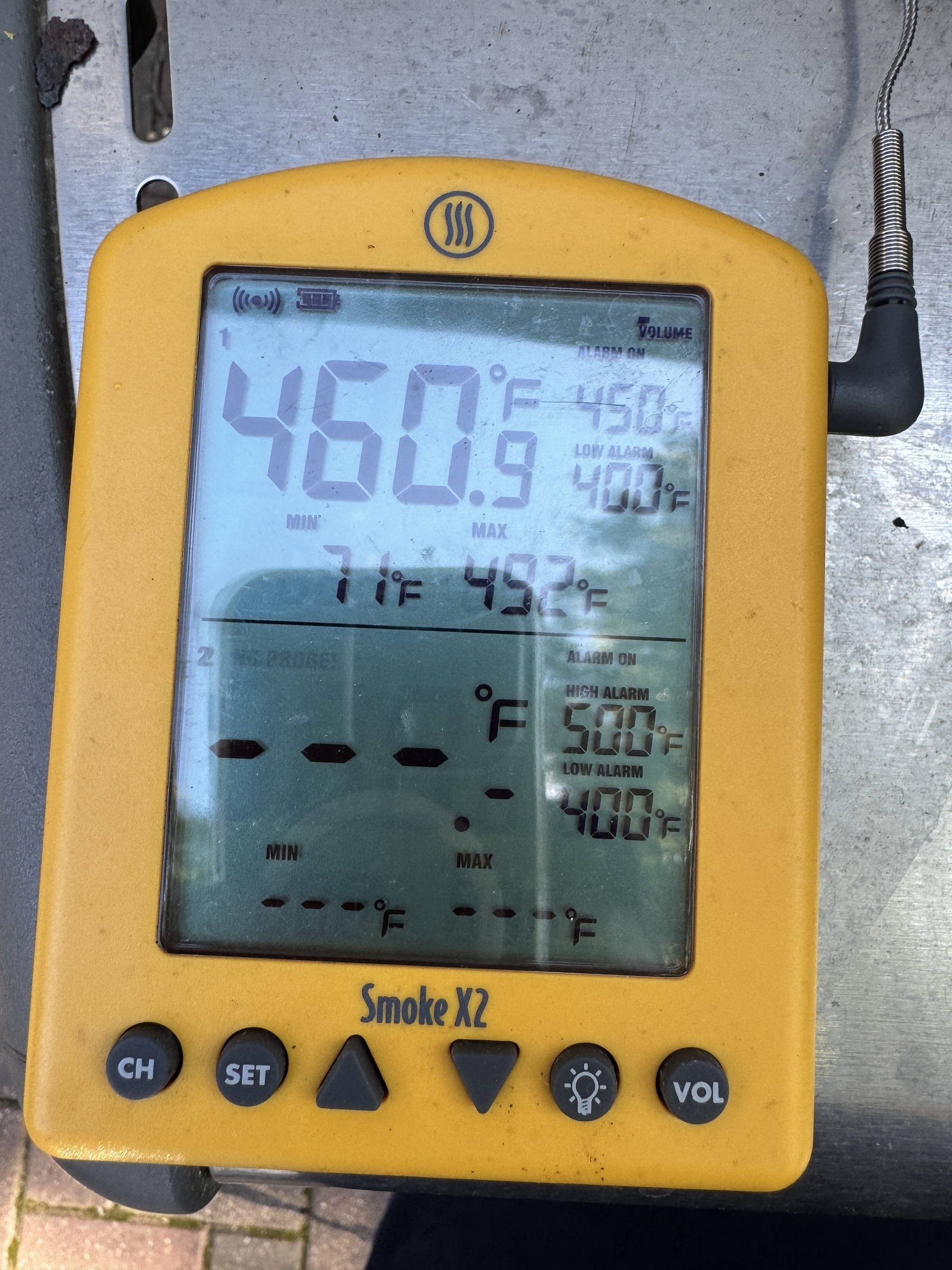

Wow, the Weber thermometer built into the grill is very inaccurate. #cooking #grilling

-

I didn’t realize @Target was really that anti-labor.

#laborday

-

Oh, Apple Intelligence, you never disappoint.
